An effective Kubernetes backup solution must be able to preserve all the underlying data and configurations of an application. Failure to do so can result in slow and inconsistent restores during disaster recovery.

Container-level granularity is essential, but it’s also important to back up and restore applications at the namespace level. This makes it easy to move them between environments for migrations, upgrades, or dev-to-prod workflows.
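
To make the namespace-level idea concrete, here is a minimal Python sketch. It assumes objects have already been read from the cluster as plain dictionaries in the usual manifest shape (`kind`, `metadata.name`, `metadata.namespace`). The function names `backup_namespace` and `restore_to_namespace` are hypothetical and not part of any backup tool’s API. The sketch copies object definitions only and does not move volume data.

```python
import copy
from typing import Any

# A Kubernetes object as a plain dict in manifest shape (kind, metadata, spec, ...).
Manifest = dict[str, Any]

# Fields assigned by the API server; they must not be replayed into another environment.
SERVER_FIELDS = ("uid", "resourceVersion", "creationTimestamp")


def backup_namespace(objects: list[Manifest], namespace: str) -> list[Manifest]:
    """Capture every object in `namespace` as one backup unit (deep copies)."""
    return [
        copy.deepcopy(obj)
        for obj in objects
        if obj["metadata"].get("namespace") == namespace
    ]


def restore_to_namespace(bundle: list[Manifest], target: str) -> list[Manifest]:
    """Re-target a namespace backup, e.g. promoting shop-dev to shop-prod."""
    restored = []
    for obj in bundle:
        clone = copy.deepcopy(obj)  # leave the backup bundle itself untouched
        clone["metadata"]["namespace"] = target
        for field in SERVER_FIELDS:
            clone["metadata"].pop(field, None)
        restored.append(clone)
    return restored
```

Stripping server-assigned metadata such as `uid` and `resourceVersion` is what lets the same bundle be replayed into a different namespace or cluster, for example backing up `shop-dev` and restoring it as `shop-prod`.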

High Availability

For Kubernetes applications to be highly available, they must be backed up regularly. Backups can be critical to getting an application running again after a data breach, hardware failure, or system crash. Unfortunately, many enterprise backup and recovery tools weren’t designed for Kubernetes and struggle to back up containerized applications.

This is because traditional backup tools don’t understand how a containerized application is put together; they focus on backing up individual VMs, servers, or disks. Additionally, many of these tools back up only a single namespace or individual PersistentVolumeClaims, and do not understand how a namespace is structured or how to back up the other elements that make up the application (e.g., the objects stored in etcd, such as StatefulSets and ConfigMaps).

A Kubernetes backup solution needs to be able to back up all of the components of an application as a single unit. It must also be able to provide the same functionality as a standard backup solution, including backup scheduling, retention, encryption, and tiering.
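
The policy features listed above can be modeled as a small data structure. The sketch below is a hypothetical illustration: `BackupPolicy`, `apply_policy`, `next_run`, and the hot/cold tier names are assumptions, and encryption appears only as a flag because the actual encryption would be handled by the backup target.

```python
from dataclasses import dataclass, replace
from datetime import datetime, timedelta


@dataclass(frozen=True)
class BackupPolicy:
    """Hypothetical per-namespace policy; field names are illustrative only."""
    namespace: str
    interval: timedelta                        # scheduling: time between backups
    keep_last: int                             # retention: how many backups to keep
    encrypt: bool = True                       # encryption: flag passed to the backup target
    tier_after: timedelta = timedelta(days=7)  # tiering: age at which backups move to cold storage


@dataclass(frozen=True)
class Backup:
    name: str
    created: datetime
    tier: str = "hot"


def next_run(policy: BackupPolicy, last_run: datetime) -> datetime:
    """When the scheduler should start the next backup."""
    return last_run + policy.interval


def apply_policy(policy: BackupPolicy, backups: list[Backup], now: datetime) -> list[Backup]:
    """Keep the newest `keep_last` backups; kept ones older than `tier_after` go cold."""
    newest_first = sorted(backups, key=lambda b: b.created, reverse=True)
    kept = newest_first[: policy.keep_last]
    return [
        replace(b, tier="cold") if now - b.created > policy.tier_after else b
        for b in kept
    ]
```

Keeping the whole policy in one object mirrors the point above: scheduling, retention, encryption, and tiering apply to the application as a single unit rather than to individual volumes.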

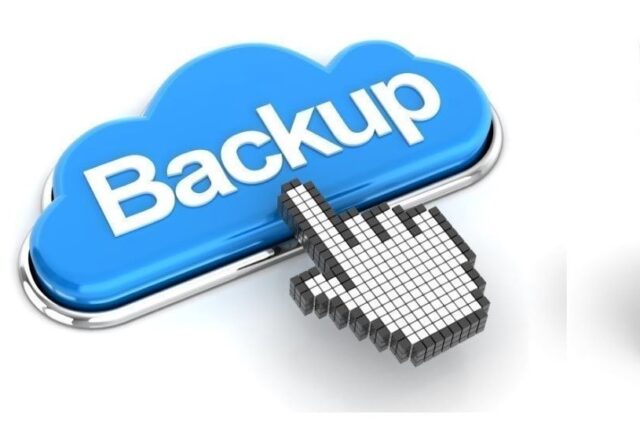

Fortunately, there are now solutions that can back up Kubernetes applications. These include a Kubernetes-native open-source tool that provides backup, disaster recovery, and migration capabilities. This tool works with LINBIT SDS to manage storage volume snapshots and backs up all the essential elements of a Kubernetes application, including the resources deployed in its namespace and its PersistentVolumeClaims. Some of these tools can also perform in-place recovery of a backed-up namespace or of specific PersistentVolumeClaims within it.
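
Selective in-place recovery boils down to deciding which live objects get overwritten by their backed-up versions. This minimal sketch assumes objects are plain manifest dictionaries, and `restore_in_place` is a hypothetical helper. It models only the object selection; the volume data itself would come from the storage layer’s snapshots, which the sketch does not simulate.

```python
import copy
from typing import Any, Optional

Manifest = dict[str, Any]


def object_key(obj: Manifest) -> tuple[str, str, str]:
    """Identify an object by kind, namespace, and name."""
    meta = obj["metadata"]
    return (obj["kind"], meta.get("namespace", ""), meta["name"])


def restore_in_place(
    live: list[Manifest],
    backup: list[Manifest],
    namespace: str,
    pvc_names: Optional[set[str]] = None,
) -> list[Manifest]:
    """Overwrite live objects in `namespace` with their backed-up versions.

    With `pvc_names`, only those PersistentVolumeClaims are restored and every
    other object in the namespace keeps its live state.
    """

    def selected(obj: Manifest) -> bool:
        if obj["metadata"].get("namespace") != namespace:
            return False
        if pvc_names is None:
            return True  # full namespace restore
        return (
            obj["kind"] == "PersistentVolumeClaim"
            and obj["metadata"]["name"] in pvc_names
        )

    # Index live objects so backed-up versions replace them by identity.
    merged = {object_key(obj): obj for obj in live}
    for obj in backup:
        if selected(obj):
            merged[object_key(obj)] = copy.deepcopy(obj)
    return list(merged.values())
```

Calling it with `pvc_names={"db-data"}` rolls back just that claim, while omitting `pvc_names` restores the whole namespace.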

Scalability

Talk to IT professionals for more than a minute, and the conversation will likely turn to containers and orchestration. It’s no surprise that these topics top most organizations’ wishlists. Yet operationalizing containers at scale isn’t for weekend enthusiasts: it requires planning, orchestration, and backup/restore.

Kubernetes distributes workloads efficiently and natively increases availability through replication controllers (today typically ReplicaSets managed by Deployments), which automatically maintain a set of identical pod replicas. This enables a cluster to adapt to changing application loads without disrupting existing applications. To protect these controllers and the pods they manage, backup and restore must be built into the same workflow as application deployment and management.

Like any other application, Kubernetes applications store data in configuration files and databases. While backing up the underlying VMs is relatively simple, containerized applications are much more complex. A backup solution that is not “application-aware” may fail to capture all the necessary information, leading to inconsistent restores.

Effective namespace-level backup and restore in Kubernetes requires an “application-aware” solution. It needs to know which resources and files belong to the application and be able to preserve them at a granular level. It should also produce a consistent point-in-time copy even while the application keeps changing during the backup. A backup that captures all of an application’s volumes at the same instant, as if the system had crashed at that moment, is known as “crash-consistent,” and it is critical for keeping the restored components consistent with one another.
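
A common way to get a consistent point-in-time copy across several volumes is to pause writes, snapshot every volume, and then resume. The sketch below assumes hypothetical `freeze`, `thaw`, and `take_snapshot` callables standing in for a pre-backup hook (for example, flushing and locking a database) and a storage snapshot call; it does not talk to any real storage API. Because writes are flushed and paused first, this yields an application-consistent copy, which goes beyond crash consistency.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable


@dataclass(frozen=True)
class Snapshot:
    volume: str
    taken_at: datetime


def consistent_backup(
    volumes: list[str],
    freeze: Callable[[], None],
    thaw: Callable[[], None],
    take_snapshot: Callable[[str, datetime], Snapshot],
) -> list[Snapshot]:
    """Snapshot all of an application's volumes while its writes are paused."""
    freeze()  # pre-backup hook: flush pending writes and block new ones
    try:
        # One shared timestamp records that the set forms a single point in time.
        taken_at = datetime.now(timezone.utc)
        return [take_snapshot(volume, taken_at) for volume in volumes]
    finally:
        thaw()  # post-backup hook: always resume writes, even if a snapshot failed
```

Running `thaw` in a `finally` block matters: a failed snapshot must never leave the application frozen.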

Reliability

The ability to reliably restore applications to a known state after data loss or a system failure is crucial for organizations. It can be the difference between a business that stays up and running with minimal downtime and one that loses valuable services, or even has to shut down an entire application and lose critical business information.

However, traditional backup and recovery tools are not designed for Kubernetes, which can lead to inconsistent backups and restores across different environments. Even when an organization combines multiple Kubernetes-native tools to perform backup and recovery, the combination introduces significant complexity and risk.

A vital requirement of a Kubernetes-native backup and recovery solution is that it be application-based rather than VM-based, because Kubernetes applications are highly dynamic and can scale up or down rapidly to meet demand. Additionally, a single application in production may consist of hundreds of components, including containers and pods, ConfigMaps, certificates, Secrets, and volumes, running on multiple machines with different configurations.
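
Being application-based in practice means gathering every object that belongs to an application, whatever its kind or the node it runs on. This sketch assumes the application’s objects share a label such as `app: shop` (a common convention, not a requirement) and uses a simplified equality-based label selector; `collect_application` and the `APP_KINDS` set are illustrative. Certificates are assumed to live in Secrets, as TLS certificates usually do in Kubernetes.

```python
from typing import Any

Manifest = dict[str, Any]

# Kinds captured together as one application (illustrative, not exhaustive).
APP_KINDS = {
    "Deployment", "StatefulSet", "Service",
    "ConfigMap", "Secret", "PersistentVolumeClaim",
}


def matches(labels: dict[str, str], selector: dict[str, str]) -> bool:
    """Equality-based selector: every selector key must be present with that value."""
    return all(labels.get(key) == value for key, value in selector.items())


def collect_application(objects: list[Manifest], selector: dict[str, str]) -> dict[str, list[str]]:
    """Group the names of an application's objects by kind."""
    app: dict[str, list[str]] = {}
    for obj in objects:
        labels = obj["metadata"].get("labels", {})
        if obj["kind"] in APP_KINDS and matches(labels, selector):
            app.setdefault(obj["kind"], []).append(obj["metadata"]["name"])
    return app
```

Pods are left out on purpose, on the assumption that they are managed by a controller: they are recreated from the StatefulSet or Deployment spec, so backing up the controller is enough.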

The solution must be able to back up all these elements at a granular level and capture any pending writes that haven’t been flushed to disk. Without both, a successful recovery after data loss or a disaster cannot be guaranteed. Additionally, the backup and recovery solution must have visibility into the environment to identify critical vulnerabilities and misconfigurations before threat actors exploit them.

Security

The security of your containers and applications is critical to the Kubernetes environment. This includes building images free of vulnerabilities, ensuring that deployments follow best practices, and defending against runtime threats. Neglecting any of these exposes your business to data loss and reputational damage.

Unfortunately, many organizations aren’t backing up Kubernetes with Kubernetes-native tools, if they’re backing it up at all. In many cases, teams rely on general-purpose backup and recovery tools that don’t understand container environments, which leaves them with limited features and no way to manage the full complexity of a Kubernetes environment.

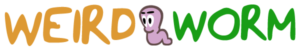

A proper Kubernetes backup requires a solution that understands how the cluster works, including how it stores data in persistent volumes. It also needs to back up the etcd configuration database, the primary storage containing container images, and the persistent volume references in the application’s YAML manifests.
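
Finding the persistent volume references in manifests is mostly a matter of walking pod specs. This sketch reads the JSON that `kubectl get <resources> -o json` returns (a `List` object with an `items` array) and looks for `persistentVolumeClaim.claimName` under `spec.volumes` for Pods and under `spec.template.spec.volumes` for workloads with a pod template. It assumes all manifests come from a single namespace and does not handle StatefulSet `volumeClaimTemplates`; the helper names are hypothetical.

```python
import json
from typing import Any

Manifest = dict[str, Any]


def load_manifests(text: str) -> list[Manifest]:
    """Parse `kubectl get <resources> -o json` output (a List with `items`)."""
    return json.loads(text).get("items", [])


def pod_spec(obj: Manifest) -> dict[str, Any]:
    """Pod spec of a Pod, or the pod template spec of a workload such as a Deployment."""
    spec = obj.get("spec", {})
    if obj["kind"] == "Pod":
        return spec
    return spec.get("template", {}).get("spec", {})


def claimed_pvcs(manifests: list[Manifest]) -> set[str]:
    """Names of PersistentVolumeClaims referenced from pod volumes."""
    names: set[str] = set()
    for obj in manifests:
        for volume in pod_spec(obj).get("volumes", []):
            claim = volume.get("persistentVolumeClaim")
            if claim:
                names.add(claim["claimName"])
    return names


def missing_from_backup(manifests: list[Manifest], backed_up: set[str]) -> set[str]:
    """PVCs that workloads depend on but the backup did not capture."""
    return claimed_pvcs(manifests) - backed_up
```

Running a check like this before trusting a backup flags volumes that a workload depends on but the backup never captured.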

The right tool also needs to detect malicious activity in the cluster by observing active network traffic and comparing it against what your Kubernetes network policies say is expected. This can include tracking 5-tuple data (Source IP, Source Port, Destination IP, Destination Port, and Protocol) or leveraging machine learning to identify unusual behavior, such as a sudden increase in network latency between two pods.
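
The 5-tuple comparison can be sketched with Python’s standard `ipaddress` module. The `AllowRule` type is a deliberate simplification: real NetworkPolicies select pods by label and namespace rather than by raw CIDR, so treat this as a model of the idea, not of the NetworkPolicy API. Source ports are recorded but not matched, because clients normally pick them from an ephemeral range.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Flow:
    """One observed connection, identified by its 5-tuple."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str


@dataclass(frozen=True)
class AllowRule:
    """Hypothetical, simplified stand-in for a rule derived from a network policy."""
    src_cidr: str
    dst_cidr: str
    dst_port: int
    protocol: str

    def permits(self, flow: Flow) -> bool:
        return (
            ip_address(flow.src_ip) in ip_network(self.src_cidr)
            and ip_address(flow.dst_ip) in ip_network(self.dst_cidr)
            and flow.dst_port == self.dst_port
            and flow.protocol == self.protocol
        )


def unexpected_flows(flows: list[Flow], rules: list[AllowRule]) -> list[Flow]:
    """Flows that no rule permits; candidates for a security alert."""
    return [flow for flow in flows if not any(rule.permits(flow) for rule in rules)]
```

For example, with a single rule allowing `10.0.1.0/24` to reach `10.0.2.10` on TCP port 5432, a connection from `10.0.9.7` or one to port 22 would be reported.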